Invisible Women: Exposing Data Bias in a World Designed for Men

By Caroline Criado Perez

Vintage Digital, 2019 | ISBN-10: 1419729071 | ISBN-13: 978-1419729072

Hardcover: 406 pages

Many people are familiar with the classic symptoms of a heart attack: chest pain and shortness of breath. But what they might not realize is that about two-thirds of women exhibit less-typical symptoms, such as subtle pressure or tightness in the chest, rather than the male presentation of full-blown chest pain. A British cardiology study showed that women experience 50% higher rates of heart attack misdiagnosis, with comparatively fewer women surviving the first attack. Historically, it was assumed that women with heart disease could be diagnosed and treated by applying the results of research performed on men, a one-size-fits-all approach to medical research. We now know that this approach does not always serve women well.

It was this study of heart attack misdiagnosis in women that prompted Caroline Criado Perez to investigate whether research in other areas was also affected by the failure to differentiate between male and female data. In her book Invisible Women: Exposing Data Bias in a World Designed for Men, the author gives countless examples of data discrimination and its effects.

DATA DEPENDENCE

Data is fundamental to our information society, and we are increasingly dependent on it to make critical decisions and allocate resources for economic development, health care, education, transportation, the workplace, and public policy. When data is collected and analyzed without being disaggregated by gender, bias and discrimination result. Decision-makers rely on available information, but as Criado Perez points out, up until now men have been treated as the default human being and women as atypical. Women end up paying enormous costs for this bias: in time, in money, and even with their lives.
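To make the effect of disaggregation concrete, the following is a minimal Python sketch, not an example from the book. It assumes a hypothetical survey of preferred office temperatures; the records, the values, and the function names `pooled_mean` and `means_by_group` are all invented for illustration. It shows how a single pooled average can describe neither group well, while the same data split by sex reveals the difference.

```python
from statistics import mean

# Hypothetical survey records: (sex, preferred office temperature in °C).
# These values are invented for illustration; they are not data from the book.
records = [
    ("F", 25.0), ("F", 24.5), ("F", 25.5),
    ("M", 22.0), ("M", 21.5), ("M", 22.5),
    ("M", 22.0), ("M", 22.0), ("M", 22.0),
]

def pooled_mean(rows):
    """Average over all records, ignoring which group each one belongs to."""
    return mean(value for _, value in rows)

def means_by_group(rows):
    """Average computed separately for each group (disaggregated)."""
    groups = {}
    for group, value in rows:
        groups.setdefault(group, []).append(value)
    return {group: mean(values) for group, values in groups.items()}

pooled = pooled_mean(records)
by_sex = means_by_group(records)

print("Pooled mean:", pooled)
print("Means by sex:", by_sex)
```

In this made-up sample, the pooled figure sits closer to the larger group's preference, so a setting chosen from it would suit the majority group better than the minority one. Only the disaggregated view makes that gap visible.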

THE STANDARD DEFAULT MALE

It starts with everyday inconveniences: women freeze in their offices because the default settings for heating and air-conditioning systems are calibrated to men’s higher metabolic rate; they cannot reach shelves placed at a height suited to the average man; and they wait in long lines at movies or events because the ladies’ room was not designed with the space needed to accommodate women’s biological needs. Some of these problems are merely annoying; others can be life-threatening, as with heart attacks or car accidents. For example, most crash-test dummies are modeled on men’s anatomy, and cars are built correspondingly. Women are 47% more likely than men to be seriously injured in an accident and 71% more likely to be moderately injured. A woman’s probability of dying in a car accident is 17% higher than a man’s.

In product design and development, prototypes are often built using male body dimensions and requirements. After the launch of the 2018 iPhone®, female customers criticized Apple for creating a phone that was too large for the average woman’s hand. Even in the workplace, men are considered the standard default human being, whether in the design of safety clothing, hiring criteria, or guidelines for job promotions. Criado Perez warns that statistical evidence, which may seem objective, can be highly male-biased because studies fail to include women’s data in the final analysis and instead make male attributes the standard.

AI AND A SENSE OF URGENCY IN CLOSING THE GENDER DATA GAP

The lack of data referencing women stands to be exacerbated through technological developments, such as the products of artificial intelligence (AI) and machine learning. Criado Perez contends that “artificial intelligence that helps doctors with diagnoses, scans through CVs, and even conducts interviews with potential job applicants is already common. But these AI algorithms have usually been trained on data sets that are riddled with data gaps — and this biased data is being unwittingly hardwired into the very code to which we’re outsourcing our decision-making.”

Criado Perez argues that although statistics and algorithms are crucial, the human experience is equally important. If the world in which we live is to work for everyone, we need women to not only be considered, but also be a part of the conversation. The failure to include women’s perspectives, as well as their data, serves as a driver of unintended male bias attempting to pass as “gender neutral” information.

BEYOND THE GENDER DATA GAP

The gender data gap results from not collecting data about women and, when such data is collected, from not disaggregating it by gender. Criado Perez breaks things down even further, explaining that “when it comes to women of color, disabled women, working-class women, the data is practically nonexistent. Not because it isn’t collected, but because it is not separated from the male data.” The author reminds us that failing to collect data on women allows discrimination to continue, and that this discrimination remains invisible because it is not accounted for in the data.

Invisible Women stresses the importance of women being counted and visible through the inclusion of their data. The author takes issue with what she refers to as the “insidious myth of meritocracy” — where the merits are associated with typical male attributes. She underscores “the attractiveness of a myth that tells the very people who benefit from it that all their achievements are a product of their own personal merit.” She points out the fallacy of people’s belief in meritocracy as not only the way things should work, but as the way things do work — even when they don’t.

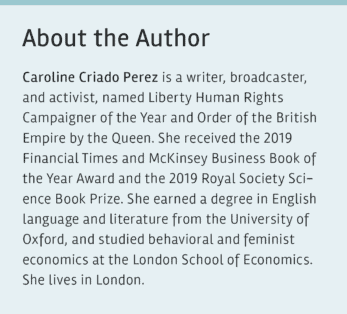

Rishelle Wimmer is a senior lecturer in the information technology and systems management department of the FH Salzburg University of Applied Sciences. She studied operations research and systems analysis at Cornell University and holds a master’s degree in educational sciences from the University of Salzburg. She serves on the SWE editorial board and the research advisory council and has been the faculty advisor for the Salzburg SWE affiliate since FY17.

“Invisible Women: Exposing Data Bias in a World Designed for Men” was reviewed by Rishelle Wimmer, SWE Editorial Board. This article appears in the 2020 spring issue of SWE Magazine.
